When Anthropic announced Claude for Life Sciences on October 20, 2025, it marked the company's first formal entry into a specific vertical market. But this is not simply Claude with some biology training data added. The platform's architecture suggests a deliberate strategy: position Claude as an operating system for scientific R&D by embedding it directly into the tools researchers already use every day.

The announcement comes at a moment when the life sciences industry faces a data crisis. High-throughput technologies like next-generation sequencing generate petabytes of complex data. Systematic literature reviews take an average of 18 months to complete manually. Clinical trials consume a large share of drug development budgets that run past $1B, and 80% face recruitment delays. Against this backdrop, Anthropic is betting that the value of AI in science comes not from raw capability alone, but from integration into existing workflows.

Three Pillars: Building More Than a Chatbot

Claude for Life Sciences rests on three architectural components that work together to transform the model from a conversational AI into a workflow orchestrator.

Advanced Scientific Reasoning

At the foundation is Claude Sonnet 4.5, which scores 0.83 on Protocol QA, a benchmark specifically designed to test understanding of laboratory protocols. This score exceeds both its predecessor Sonnet 4 (0.74) and the human expert baseline (0.79). The model also shows improvements on BixBench, an evaluation for bioinformatics tasks.

This level of performance represents a threshold. To be useful in scientific work, a model cannot just retrieve information or generate plausible text. It must comprehend the precise steps, conditional logic, and specialized terminology of experimental procedures. The ability to interpret a laboratory protocol moves the model's function from passive summarization to active comprehension of scientific process.

Connectors: Embedding Into Existing Workflows

A foundational model without access to specialized tools remains isolated, no matter how capable. Anthropic addresses this through Connectors, which are APIs that allow Claude to interact directly with scientific software platforms.

Current integrations include Benchling (the R&D data management platform used in many labs), 10x Genomics (for single-cell analysis through natural language queries), PubMed (for biomedical literature), and BioRender (for scientific figures). Additional enterprise integrations span Google Workspace, Microsoft SharePoint, Databricks, and Snowflake.

This connector strategy transforms Claude from an isolated tool into an integrated orchestrator. By embedding within industry-standard platforms rather than forcing users to adopt new workflows, Anthropic maximizes utility while creating platform stickiness. Once an entire data pipeline flows through Claude's connectors, switching costs become substantial.
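To make the orchestration idea concrete, here is a minimal sketch of how a tool request issued by a model could be routed to a connector. Everything in it is hypothetical: the `CONNECTORS` registry, the tool names `literature.search` and `notebook.search`, and the canned data are invented for illustration and do not reflect Anthropic's or any partner's actual interfaces.

```python
from typing import Callable, Dict, List

# Hypothetical connector type: takes a query string, returns matching records.
Connector = Callable[[str], List[str]]


def search_literature(query: str) -> List[str]:
    """Stand-in for a literature connector; searches a canned list of titles."""
    corpus = [
        "Single-cell atlas of the human lung",
        "CRISPR screening in primary T cells",
        "Quality control for single-cell RNA-seq",
    ]
    return [title for title in corpus if query.lower() in title.lower()]


def search_notebook(query: str) -> List[str]:
    """Stand-in for an electronic lab notebook connector."""
    entries = [
        "2025-01-12 lung dissociation protocol",
        "2025-02-03 T cell expansion",
    ]
    return [entry for entry in entries if query.lower() in entry.lower()]


# Hypothetical registry mapping tool names to connector functions.
CONNECTORS: Dict[str, Connector] = {
    "literature.search": search_literature,
    "notebook.search": search_notebook,
}


def dispatch(tool_request: dict) -> List[str]:
    """Route a model-issued tool request to the matching connector."""
    name = tool_request["tool"]
    if name not in CONNECTORS:
        raise KeyError(f"No connector registered for {name!r}")
    return CONNECTORS[name](tool_request["query"])


result = dispatch({"tool": "literature.search", "query": "single-cell"})
print(result)
```

The point of the pattern is that the model never touches the underlying system directly. It emits a structured request, and the orchestration layer decides which integration handles it, which is also where access control and audit logging would naturally live.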

Agent Skills: Reproducible Workflows

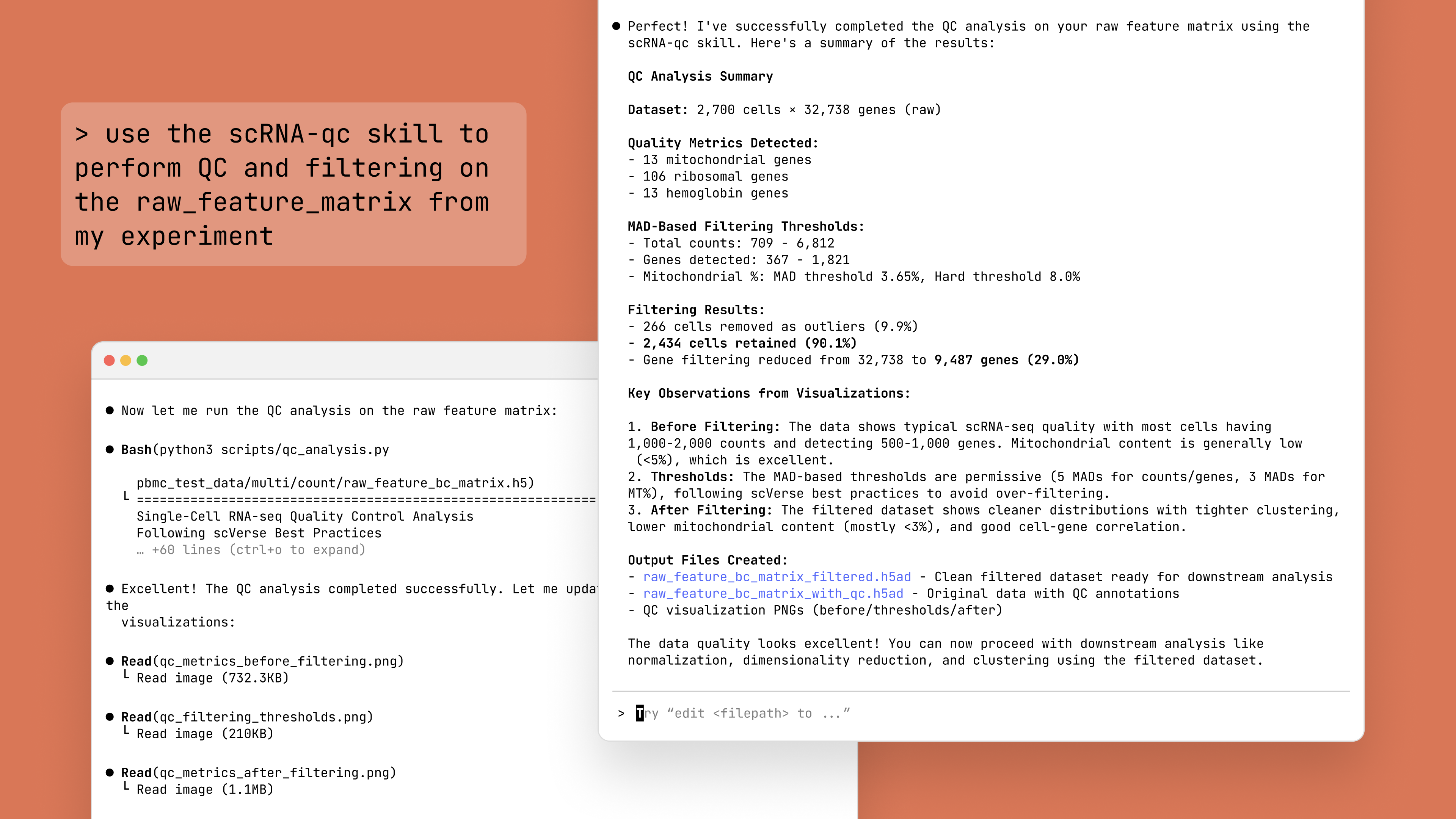

The third pillar addresses reproducibility, a persistent challenge in computational science. Agent Skills are pre-packaged workflows that enable Claude to execute specific, multi-step protocols consistently.

The first released skill is single-cell-rna-qc, which performs quality control on single-cell RNA sequencing data following scverse ecosystem best practices. This choice is strategic. Single-cell genomics is experiencing explosive growth but generates massive, complex datasets. Quality control is a universal, tedious, yet non-negotiable first step in any analysis.

By encapsulating this ubiquitous bottleneck into a simple, callable skill, Anthropic provides immediate tangible value while building familiarity with delegating analytical workflows to AI. This initial skill serves as an entry point: once researchers rely on Claude for QC, they become receptive to more advanced skills for clustering, cell type annotation, and differential expression analysis.
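For readers unfamiliar with what single-cell QC involves, the sketch below shows the general shape of the task in plain Python. It is not the single-cell-rna-qc skill and does not use scverse tooling; it assumes a simplified filter that flags cells whose total counts or detected genes fall more than a set number of median absolute deviations (MADs) from the median, plus a cap on mitochondrial fraction. The `CellMetrics` type, the thresholds (5 MADs, 20% mitochondrial), and the example values are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass
class CellMetrics:
    barcode: str
    total_counts: int    # total UMI counts in the cell
    genes_detected: int  # genes with at least one count
    mito_counts: int     # counts from mitochondrial genes


def mad_outliers(values: List[float], n_mads: float) -> List[bool]:
    """Flag values more than n_mads median absolute deviations from the median."""
    center = median(values)
    mad = median([abs(v - center) for v in values])
    # If mad is 0, any value that differs from the median is flagged.
    return [abs(v - center) > n_mads * mad for v in values]


def cells_passing_qc(
    cells: List[CellMetrics],
    n_mads: float = 5.0,             # assumed threshold
    max_mito_fraction: float = 0.2,  # assumed threshold
) -> List[str]:
    """Return barcodes of cells that pass all three QC checks."""
    bad_counts = mad_outliers([c.total_counts for c in cells], n_mads)
    bad_genes = mad_outliers([c.genes_detected for c in cells], n_mads)
    kept = []
    for cell, counts_flag, genes_flag in zip(cells, bad_counts, bad_genes):
        mito = cell.mito_counts / cell.total_counts if cell.total_counts else 1.0
        if not (counts_flag or genes_flag) and mito <= max_mito_fraction:
            kept.append(cell.barcode)
    return kept


# Invented example data: one low-count cell and one high-mitochondrial cell.
cells = [
    CellMetrics("AAAC", 5000, 1800, 200),
    CellMetrics("AAAG", 5200, 1900, 260),
    CellMetrics("AACC", 4800, 1750, 240),
    CellMetrics("AACG", 5100, 1850, 2500),
    CellMetrics("AAGT", 300, 90, 10),
    CellMetrics("ACGT", 4900, 1700, 150),
]
kept = cells_passing_qc(cells)
print(kept)
```

Real pipelines typically compute these metrics on log-transformed counts and across tens of thousands of cells, but the logic is the same: every analysis starts by deciding which cells to trust, and packaging that decision as a fixed procedure is what makes it reproducible.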

Strategic Partnerships: Borrowing Credibility

Anthropic has assembled partnerships across the pharmaceutical value chain. The pattern reveals a strategy of co-development with established industry leaders to bridge the trust gap inherent in deploying probabilistic AI systems in high-stakes, regulated environments.

| Organization | Sector | Stated Use Case |
|---|---|---|
| Sanofi | Major Pharma | Daily use in "Concierge app" with internal knowledge libraries across the value chain |
| Novo Nordisk | Major Pharma | Setting a "new standard in document and content automation in pharma development" |
| Genmab | Biotech/Oncology | Creating GxP-compliant outputs from clinical data sources |
| Schrödinger | Computational Chemistry | Using Claude Code for platform development with up to 10x speed improvements |
| Axiom Bio | Drug Safety | Building agents to query databases and predict clinical drug toxicity |
| 10x Genomics | Genomics Tools | Single-cell and spatial analysis via natural language |
| Broad Institute | Academic Research | Building AI agents on Terra platform |
| Stanford University | Academic Research | Developing "Paper2Agent" to turn research papers into interactive agents |

Source: Claude for Life Sciences announcement

These partnerships serve multiple functions. Sanofi's "Concierge app," used daily by "most Sanofians," demonstrates enterprise-scale adoption. Schrödinger's reported 10x speed improvements in code development provide concrete performance validation. Genmab's work on GxP-compliant outputs signals ambitions in the highly regulated clinical space. Academic partnerships with the Broad Institute and Stanford provide scientific credibility.

This partner roster is not merely marketing. It represents third-party validation from respected institutions, effectively borrowing their credibility to de-risk adoption for the broader industry.

From Literature Review to Lab Automation: Current Applications

Partner reports reveal concrete use cases across the drug development pipeline, though the degree of integration varies substantially.

Literature Analysis and Hypothesis Generation

FutureHouse uses Claude for literature analysis workflows, specifically highlighting its accuracy in analyzing figures within scientific papers. This capability addresses a genuine bottleneck: much critical data in biomedical research appears in visual formats like charts and diagrams that are inaccessible to text-only models.

Claude's 200K-token standard context window (approximately 150,000 words or 500+ pages) enables simultaneous analysis of dozens of research papers. This addresses the time constraint: literature reviews that take 18 months manually can potentially be compressed dramatically.
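A back-of-the-envelope calculation shows how a token budget translates into papers. The sketch below assumes a rule of thumb of about 0.75 English words per token, which varies by tokenizer and by text, and an average paper length of 6,000 words. Both figures, along with the 200,000-token example budget and the 20,000 tokens reserved for instructions and output, are assumptions rather than measured values.

```python
import math

# Assumed rule of thumb for English prose; actual ratios vary by tokenizer.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Approximate number of English words that fit in a token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def papers_that_fit(
    context_tokens: int,
    words_per_paper: int,
    reserved_tokens: int = 0,
) -> int:
    """Whole papers that fit after reserving tokens for the prompt and the reply."""
    tokens_per_paper = math.ceil(words_per_paper / WORDS_PER_TOKEN)
    available = max(0, context_tokens - reserved_tokens)
    return available // tokens_per_paper


print(tokens_to_words(200_000))
print(papers_that_fit(200_000, words_per_paper=6_000, reserved_tokens=20_000))
```

Under these assumptions a single request holds roughly two dozen full-length papers, which is why larger reviews still need retrieval or batching rather than one giant prompt.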

Bioinformatics and Code Development

Schrödinger reports that Claude Code helps turn ideas into working code with speed gains up to 10x in some cases. Axiom Bio reports using billions of tokens in Claude Code to build agents for predicting drug toxicity.

The 10x Genomics integration enables researchers to perform complex analytical tasks through conversational prompts rather than command-line scripts. Serge Saxonov, 10x Genomics CEO, states: "Now, researchers can begin to interact with their data through natural conversation, without needing a computational background."

This democratization is significant. The barrier to entry for sophisticated computational methods has historically limited which researchers can extract insights from their own data. Lowering this barrier expands the user base for advanced genomic techniques.

Clinical Documentation and Regulatory Compliance

Novo Nordisk reports setting a "new standard in document and content automation in pharma development." The process of developing a new drug generates substantial paperwork: study protocols, investigator brochures, informed consent forms, clinical study reports, and regulatory submissions.

Genmab highlights the potential to create "GxP-compliant outputs" from clinical data sources. GxP (Good Practice) regulations ensure pharmaceutical products are safe, effective, and of high quality. The ability to generate documentation that adheres to these stringent standards is crucial for any AI tool used in late-stage development, though the technical and validation challenges remain substantial.

Enterprise Knowledge Management

Sanofi has integrated Claude with internal knowledge libraries to power its "Concierge app." This application type uses Retrieval-Augmented Generation (RAG), where the model's responses are supplemented in real-time with information from private databases. This democratizes access to institutional knowledge, breaking down data silos between departments.
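The RAG pattern itself is simple to sketch. The example below is not Sanofi's implementation. It assumes a toy in-memory knowledge base with invented document IDs and contents, and it ranks documents by plain word overlap as a stand-in for the embedding-based search a production system would more likely use.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical internal documents; IDs and contents are invented.
KNOWLEDGE_BASE: Dict[str, str] = {
    "sop-017": "Stability testing of biologics requires storage at 2 to 8 degrees Celsius.",
    "memo-203": "The oncology team archived assay results from the 2023 screening campaign.",
    "sop-044": "Deviation reports must be filed within two business days of discovery.",
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, top_k: int = 2) -> List[Tuple[str, str]]:
    """Rank documents by word overlap with the question; drop zero-overlap hits."""
    q_words = tokenize(question)
    scored = [
        (len(q_words & tokenize(text)), doc_id, text)
        for doc_id, text in KNOWLEDGE_BASE.items()
    ]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for score, doc_id, text in scored[:top_k] if score > 0]


def build_prompt(question: str) -> str:
    """Assemble the retrieved passages and the question into one model prompt."""
    passages = retrieve(question)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


prompt = build_prompt("How quickly must deviation reports be filed?")
print(prompt)
```

Because the model is instructed to answer only from retrieved passages and to cite them, a reviewer can trace each claim back to a source document, which matters more in a regulated setting than the retrieval method itself.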

Komodo Health reports transforming "weeks-long analytical workflows into actionable intelligence in minutes." When cycle time for complex analysis drops by orders of magnitude, it changes the nature of scientific work. Researchers can adopt a more agile, exploratory approach rather than pursuing only pre-defined, high-confidence hypotheses due to time constraints.

The Hard Constraints: Technical, Regulatory, and Ethical Challenges

The path to widespread adoption faces formidable obstacles that cannot be solved by model improvements alone.

The Hallucination Problem in Zero-Error Environments

LLMs generate text by repeatedly predicting a probable next token, which can produce plausible but fabricated outputs. While research shows hallucination rates can be reduced below 0.5% in some contexts, even that error rate is unacceptably high for applications where an incorrect dosage, fabricated lab result, or misinterpreted genetic sequence could be lethal.
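A small per-claim error rate compounds quickly across a long document. The sketch below assumes, as a simplification, that each claim fails independently at a fixed rate. Real errors are likely correlated, so this is only an order-of-magnitude guide, and the claim counts are illustrative.

```python
def prob_at_least_one_error(error_rate: float, n_claims: int) -> float:
    """P(at least one error), assuming each claim fails independently."""
    return 1.0 - (1.0 - error_rate) ** n_claims


# Illustrative claim counts at the 0.5% per-claim rate cited above.
for n in (10, 100, 500):
    print(n, round(prob_at_least_one_error(0.005, n), 3))
```

At a 0.5% per-claim rate, a document with 100 factual claims has roughly a 39% chance of containing at least one error, and one with 500 claims has better than a 90% chance. That is the arithmetic behind treating every output as a draft.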

LLMs also have static knowledge bases with fixed cutoff dates, limiting utility in rapidly evolving fields. Their reasoning is correlational rather than causal, and they can fail at logic or arithmetic tasks.

Every output must be treated as a draft requiring rigorous human verification. The question becomes: at what scale can scientists realistically validate every line of AI-generated code or every claim in a 50-page regulatory document?

Regulatory Compliance: The Black Box Problem

The pharmaceutical industry is governed by GxP regulations that mandate validated, auditable, and transparent systems. How does a company prove to the FDA that an AI-generated clinical study report is valid when the process of its generation is not fully interpretable?

Regulatory frameworks for AI in drug development are still nascent, though agencies are beginning to issue draft guidance. The industry is accustomed to deterministic software where input X always produces output Y. Probabilistic LLMs break that paradigm.

Data privacy compounds this challenge. Training or fine-tuning on proprietary corporate data and patient information from clinical trials raises concerns about data leakage and HIPAA compliance. Privacy-preserving techniques like federated learning remain complex to implement at scale.

Algorithmic Bias and Health Equity

Biomedical research has historically overrepresented individuals of European ancestry while underrepresenting women and ethnic minorities. An LLM trained on this skewed corpus could generate hypotheses prioritizing biology relevant to over-studied populations, or draft trial protocols with inclusion criteria that inadvertently exclude minority groups.

This is not hypothetical speculation. The risk is documented in systematic reviews. Addressing it requires proactive auditing of training data, development of fairness metrics, and deliberate curation of diverse datasets.

The Trust Gap

The primary barrier to adoption is not technical capability but trust. Scientists, clinicians, and regulators are appropriately skeptical of deploying opaque, probabilistic systems for mission-critical, high-stakes decisions.

Anthropic's extensive partner roster is explicitly designed to bridge this gap. By co-developing solutions with Sanofi, Novo Nordisk, and the Broad Institute, the company is borrowing credibility from established institutions. These partnerships serve as third-party validation, de-risking adoption for the broader industry.

Trust is not built through benchmarks. It is built through demonstrated reliability in real-world, regulated settings over extended time periods.

Future Capabilities: From Agent Skills to Self-Driving Labs

The current architecture supports expansion toward increasingly sophisticated capabilities, though these remain speculative rather than deployed.

Next-Generation Agent Skills

Future multi-step skills could automate complex processes. A de-novo-peptide-designer skill might orchestrate workflows using generative models to design novel peptide sequences with predicted binding properties. Research in AI-driven peptide design is advancing rapidly.

A clinical-trial-site-selector skill could analyze historical trial data, demographics, and de-identified EHRs to identify optimal recruitment locations. This addresses a concrete bottleneck: 80% of trials face recruitment delays.

A multi-omic-data-integrator skill could combine genomics, transcriptomics, proteomics, and metabolomics data to generate testable hypotheses about disease mechanisms.

The development of a rich library of validated, domain-specific Agent Skills could become Anthropic's most defensible competitive advantage. While foundational LLM performance may commoditize, a proprietary "Scientific Skill Store" of GxP-compliant, reproducible workflows would be difficult to replicate.

Domain Specialization: Gene-LLMs

Models could be pre-trained directly on genomic and proteomic data rather than treating biological sequences as ordinary text. Evo 2, for example, was trained on roughly 9 trillion nucleotides from across the tree of life.

Such models could predict gene expression, identify regulatory elements, model chromatin structure, and forecast mutation impacts directly from DNA sequences. This would transform the model from a natural language processing tool into a foundational model for computational biology.

Closed-Loop Laboratory Automation

The long-term vision involves closed-loop systems where AI agents design experiments, translate designs into instructions for robotic lab automation, analyze results, and refine hypotheses iteratively. Stanford's "Paper2Agent" project, which aims to turn static research papers into interactive, executable AI agents, represents an early step toward this paradigm.
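The design, run, analyze, refine cycle can be illustrated with a toy optimization loop. The sketch below is purely illustrative: `simulated_assay` stands in for a robotic experiment and uses an invented response curve that peaks at 37 °C, and the simple step-halving search is an assumption chosen for brevity, not a method attributed to any lab or vendor.

```python
from typing import Callable, List, Tuple


def simulated_assay(temperature_c: float) -> float:
    """Stand-in for a robotic experiment; invented curve with peak yield at 37 C."""
    return 100.0 - (temperature_c - 37.0) ** 2


def closed_loop(
    run_experiment: Callable[[float], float],
    start: float,
    step: float,
    min_step: float = 0.1,
) -> Tuple[float, List[Tuple[float, float]]]:
    """Iterate design -> run -> analyze -> refine until the step size is small."""
    history = [(start, run_experiment(start))]
    best_x, best_y = history[0]
    while step >= min_step:
        improved = False
        # Design: propose one condition on each side of the current best.
        for candidate in (best_x - step, best_x + step):
            result = run_experiment(candidate)  # Run the experiment.
            history.append((candidate, result))
            if result > best_y:                 # Analyze the outcome.
                best_x, best_y = candidate, result
                improved = True
        if not improved:
            step /= 2                           # Refine: search more finely.
    return best_x, history


best_temperature, log = closed_loop(simulated_assay, start=25.0, step=4.0)
print(best_temperature, len(log))
```

The `history` list is the important part for regulated work: every proposed condition and observed result is recorded, so a human can audit why the loop converged where it did.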

Claude's architecture provides the software foundation: the model as "brain," Connectors as interfaces to digital and physical tools, and Agent Skills as procedural knowledge. Whether this vision materializes depends on solving the technical, regulatory, and trust challenges outlined above.

An Operating System for Scientific R&D?

Anthropic's Claude for Life Sciences represents a platform play rather than just a better model. The three-pillar architecture (advanced reasoning, ecosystem integration, reproducible workflows) positions Claude not as a standalone tool but as infrastructure embedded within existing scientific workflows.

The strategic approach is apparent: build trust through partnerships with established institutions, demonstrate concrete value through targeted applications like single-cell QC, and create switching costs through deep tool integrations. The extensive partner roster spanning Sanofi, Novo Nordisk, the Broad Institute, and Stanford provides both market validation and third-party credibility.

Partner reports point to real impact: code development accelerated by up to 10x in some cases, complex genomic analysis made accessible to non-programmers, and weeks-long analytical workflows reduced to minutes. Literature reviews that take 18 months manually could potentially be compressed as well. If these results hold up, they are substantial productivity gains in a data-intensive field.

However, success is contingent on addressing formidable challenges. Hallucination risks must be mitigated through RAG, fine-tuning, and mandatory human verification. GxP compliance requires developing validation frameworks for probabilistic systems, a regulatory frontier barely charted. Algorithmic bias demands proactive data curation. The trust gap must be bridged through demonstrated reliability over time, not marketing.

The platform's architecture supports future expansion into peptide design, genomics analysis, multimodal data processing, and laboratory automation. Whether Claude becomes the "operating system for R&D" that Anthropic appears to be positioning it as depends not on model performance alone, but on the company's ability to systematically address the unique technical, regulatory, and ethical demands of the life sciences.

This is Anthropic's bet: that the value of AI in science comes from integration, reproducibility, and trust built through partnerships, not from raw capability alone. The next few years will reveal whether that bet pays off.