An AI system at Google DeepMind discovered how bacteria share genes across species barriers: a mechanism that experimental biologists had been observing but couldn't explain. The discovery came not from the lab, but from 7 days of computational reasoning about existing literature. When biologists tested the AI's hypothesis about capsid-forming phage-inducible chromosomal islands (cf-PICIs), it matched their unpublished experimental observations exactly.

The real story here isn't AI replacing scientists. When advanced AI reasoning is properly combined with human expertise, you get discoveries that neither could achieve alone. The methodology behind this breakthrough matters more than the headline.

The Problem: Why Gene Transfer Mechanisms Matter

To understand why this discovery matters, you need to know what's at stake. Bacteria evolve, sometimes frustratingly fast. Antibiotic resistance, pathogenic potential, metabolic versatility: these dangerous or useful traits spread through bacterial populations not primarily through mutation, but through horizontal gene transfer (HGT).

Mutation is slow and incremental. If bacterium A needs a new gene, it usually gets it through centuries of random changes. But horizontal gene transfer changes everything: bacteria can acquire new genes from other bacteria in days. A non-pathogenic strain becomes virulent. A drug-sensitive population becomes resistant. Evolution on fast-forward.

About 20% of the genes in a typical E. coli genome arrived through horizontal transfer (Lawrence and Ochman, 1998). For antibiotic resistance specifically, conjugation accounts for 60-80% of resistance spread among Gram-negative pathogens, varying by pathogen class and geographic region. These aren't rare events. They're fundamental to bacterial survival and adaptation.

For decades, microbiologists have recognized three main mechanisms for this transfer:

- Transformation: Bacteria pick up naked DNA from their environment

- Conjugation: Direct cell-to-cell transfer through a protein bridge called a pilus

- Transduction: Bacteriophages (viruses infecting bacteria) accidentally package bacterial DNA and deliver it to the next host

These three mechanisms explain most of the gene transfer we observe, but each has limits. Transformation works best between closely related species. Conjugation requires compatible plasmids. Transduction is confined to the phage's host range, and specialized transduction only packages genes lying next to the prophage in the chromosome.

Enter phage-inducible chromosomal islands (PICIs for short). These mobile genetic elements live integrated in bacterial chromosomes across more than 200 bacterial species (Penadés and Christie, 2015). When a helper phage infects the cell, the PICI wakes up, hijacks the phage's machinery, copies itself, and spreads. In principle, then, PICIs should be bound by the same species barriers as their helper phages.

But capsid-forming PICIs (cf-PICIs) seemed different. Researchers noticed they appeared in distantly related bacterial species: transfers that standard mechanisms couldn't easily explain. How were these genetic elements jumping across species barriers that should block them?

The Methodology: Building an AI That Reasons Like a Scientist

The discovery itself is fascinating, but the methodology reveals the real technical innovation. The AI co-scientist isn't a single model making a prediction. It's a multi-agent system implementing the scientific method computationally.

The Multi-Agent Architecture

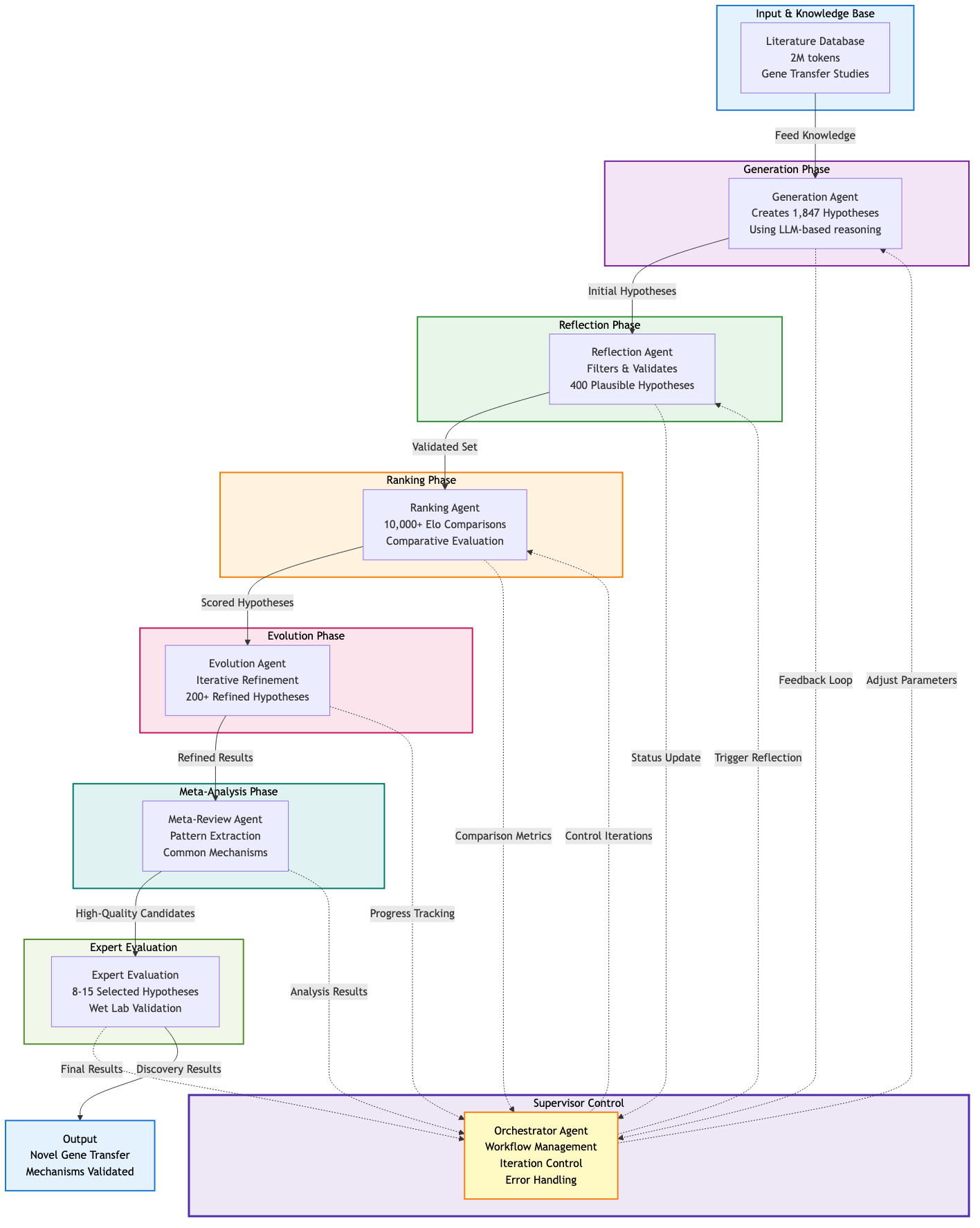

The system consists of seven specialized agents, each performing a distinct scientific function:

The Generation Agent doesn't just dream up ideas randomly. It synthesizes scientific literature from a 2-million-token context window (enough to hold hundreds of full research papers), runs computational "thought experiments," and proposes hypotheses grounded in known biology. When tasked with the cf-PICI problem, this agent explored dozens of potential mechanisms, each backed by literature citations and mechanistic reasoning.

The Reflection Agent plays critical friend. It fact-checks hypotheses against literature, identifies logical inconsistencies, and flags safety concerns. For the cf-PICI hypothesis, it would verify: Do the proposed proteins exist? Is the mechanism thermodynamically feasible? Does this contradict established phage biology?
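As a rough illustration of the Reflection step, the sketch below runs each hypothesis through a list of check functions and keeps only those that pass every one. The `Candidate` record, the `KNOWN_PROTEINS` lookup, and the contradiction flag are hypothetical stand-ins for the checks named above; the real agent performs these checks by reasoning over the literature, not with hard-coded rules.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """Hypothetical hypothesis record used only for this sketch."""
    name: str
    proteins: list[str]
    contradicts_known_biology: bool = False
    notes: list[str] = field(default_factory=list)


# Hypothetical stand-in for "do the proposed proteins exist?"
KNOWN_PROTEINS = {"capsid", "portal", "tail_fiber", "integrase"}


def proteins_exist(c: Candidate) -> bool:
    missing = [p for p in c.proteins if p not in KNOWN_PROTEINS]
    if missing:
        c.notes.append(f"unknown proteins: {missing}")
    return not missing


def consistent_with_literature(c: Candidate) -> bool:
    if c.contradicts_known_biology:
        c.notes.append("contradicts established phage biology")
    return not c.contradicts_known_biology


CHECKS = [proteins_exist, consistent_with_literature]


def reflect(candidates: list[Candidate]) -> list[Candidate]:
    """Keep only candidates that pass every check (stops at the first failure)."""
    return [c for c in candidates if all(check(c) for check in CHECKS)]


pool = [
    Candidate("tail hijacking", ["capsid", "tail_fiber"]),
    Candidate("mystery pump", ["capsid", "magic_pump"]),
    Candidate("host-range violation", ["integrase"], contradicts_known_biology=True),
]
survivors = reflect(pool)
print([c.name for c in survivors])
```

Recording the reason for each rejection in `notes` mirrors the agent's role as critical friend: a rejected hypothesis comes back with feedback rather than silently disappearing.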

The Ranking Agent implements the system's key innovation. Rather than asking "which hypothesis is best?" (a fuzzy question), it uses an Elo rating system: the same mathematical framework that ranks chess players. Pairs of hypotheses "compete" in controlled comparisons. Each comparison updates the Elo ratings. Over thousands of comparisons, the highest-rated hypotheses accumulate evidence for their superiority.

Why Elo? Because its statistical behavior is well understood. In chess, if player A is consistently stronger than player B, A's rating tends to settle above B's over repeated games. Applied to hypotheses: if hypothesis A explains the biological data better than hypothesis B, it should accumulate a higher rating through tournament play. The correlation between Elo ratings and actual hypothesis quality reached 0.65-0.72 in validation tests conducted across multiple biological domains (Gottweis et al., 2025).
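To make the update rule concrete, here is a single comparison worked through with the standard Elo formulas, assuming K = 32 and two hypotheses rated 1600 and 1500. The favorite's expected score is about 0.64, so beating the underdog earns it only about 11.5 points, while an upset moves about 20.5 points the other way. Surprising results shift ratings more than expected ones, which is what lets a better hypothesis climb quickly from a low starting point.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(r_winner: float, r_loser: float, k: float = 32) -> tuple[float, float]:
    """Return new (winner, loser) ratings after one comparison."""
    e_winner = expected_score(r_winner, r_loser)
    delta = k * (1 - e_winner)  # points transferred from loser to winner
    return r_winner + delta, r_loser - delta


print(round(expected_score(1600, 1500), 3))  # 0.64
print([round(r, 1) for r in elo_update(1600, 1500)])  # favorite wins: [1611.5, 1488.5]
print([round(r, 1) for r in elo_update(1500, 1600)])  # upset: [1520.5, 1579.5]
```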

Here's a simplified Python implementation showing how the Elo tournament algorithm works:

```python
import random


class Hypothesis:
    """Represents a scientific hypothesis with an Elo rating."""

    def __init__(self, id, name, true_quality=1500.0):
        self.id = id
        self.name = name
        # Hidden "true quality" on the Elo scale (hypothetical);
        # used only to simulate comparison outcomes
        self.true_quality = true_quality
        self.rating = 1500.0  # Initial rating
        self.wins = 0
        self.losses = 0
        self.rating_history = [1500.0]


class EloTournament:
    """Manages hypothesis ranking using the Elo system."""

    def __init__(self, k_factor=32):
        self.k_factor = k_factor  # Controls rating change magnitude
        self.hypotheses = {}

    def expected_score(self, rating_a, rating_b):
        """Calculate expected win probability for hypothesis A."""
        return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))

    def update_ratings(self, winner_id, loser_id):
        """Update Elo ratings after a pairwise comparison."""
        winner = self.hypotheses[winner_id]
        loser = self.hypotheses[loser_id]

        # Calculate expected scores from the current ratings
        expected_winner = self.expected_score(winner.rating, loser.rating)
        expected_loser = 1 - expected_winner

        # Update ratings using the Elo formula: R' = R + K * (actual - expected)
        winner.rating += self.k_factor * (1 - expected_winner)
        loser.rating += self.k_factor * (0 - expected_loser)

        # Track history
        winner.wins += 1
        loser.losses += 1
        winner.rating_history.append(winner.rating)
        loser.rating_history.append(loser.rating)

    def run_tournament(self, num_comparisons=100):
        """Simulate pairwise comparisons between hypotheses."""
        for _ in range(num_comparisons):
            # Select two distinct hypotheses at random
            h1, h2 = random.sample(list(self.hypotheses.keys()), 2)

            # Simulate the comparison: the winner is drawn from the hidden
            # "true quality" values, not from the current ratings.
            # In the real system, this would be based on evidence evaluation.
            p_h1_wins = self.expected_score(
                self.hypotheses[h1].true_quality,
                self.hypotheses[h2].true_quality,
            )
            if random.random() < p_h1_wins:
                self.update_ratings(h1, h2)
            else:
                self.update_ratings(h2, h1)


# Example: 10 hypotheses with random hidden quality, 100 comparisons
tournament = EloTournament(k_factor=32)
for i in range(10):
    tournament.hypotheses[i] = Hypothesis(i, f"H{i}", true_quality=random.uniform(1300, 1700))

tournament.run_tournament(100)

# Display final rankings
ranked = sorted(tournament.hypotheses.values(), key=lambda h: h.rating, reverse=True)
for h in ranked[:3]:
    print(f"{h.name}: Rating={h.rating:.1f}, W/L={h.wins}/{h.losses}")

# Example output (illustrative; values vary from run to run):
# H7: Rating=1626.5, W/L=15/5
# H2: Rating=1589.3, W/L=12/6
# H4: Rating=1545.2, W/L=10/8
```

The Evolution Agent takes top-ranked hypotheses and improves them. It combines mechanisms from different hypotheses, removes weak components, and explores radical reconceptualizations. If hypothesis 5 has a strong mechanism but weak evidence, and hypothesis 12 has good evidence but vague mechanism, the evolution agent creates hypothesis 47 combining both strengths.
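One way to picture this recombination is to score each hypothesis on separate components and build a child from the stronger component of each parent. The `Hyp` record, the component names (`mechanism`, `evidence`), and the 0-1 scores are hypothetical; the post does not specify how the Evolution Agent represents or scores hypotheses.

```python
from dataclasses import dataclass


@dataclass
class Hyp:
    """Hypothetical hypothesis split into scored components."""
    name: str
    mechanism: str
    mechanism_score: float  # 0-1, strength of the proposed mechanism
    evidence: str
    evidence_score: float  # 0-1, strength of supporting evidence


def recombine(a: Hyp, b: Hyp, child_name: str) -> Hyp:
    """Keep the stronger mechanism and the stronger evidence from two parents."""
    mech = a if a.mechanism_score >= b.mechanism_score else b
    evid = a if a.evidence_score >= b.evidence_score else b
    return Hyp(
        name=child_name,
        mechanism=mech.mechanism,
        mechanism_score=mech.mechanism_score,
        evidence=evid.evidence,
        evidence_score=evid.evidence_score,
    )


h5 = Hyp("H5", "tail hijacking", 0.9, "anecdotal reports", 0.3)
h12 = Hyp("H12", "vague 'broad host range'", 0.4, "tail genes from several phage families", 0.8)
h47 = recombine(h5, h12, "H47")
print(h47.mechanism, "|", h47.evidence)
# tail hijacking | tail genes from several phage families
```

The child would then re-enter the tournament like any other hypothesis, so a bad combination is weeded out by the same ranking process.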

Three supporting agents complete the architecture: Proximity (eliminating redundant proposals through similarity clustering), Meta-Review (identifying patterns across iterations), and Supervisor (orchestrating workflow timing and resource allocation).
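The Proximity agent's job of dropping near-duplicate proposals can be sketched with a similarity threshold. This is a simplified greedy stand-in for similarity clustering: it uses Jaccard similarity over word sets and keeps the first of any pair above the threshold. Both the metric and the 0.6 threshold are assumptions for illustration, not details from the paper.

```python
def jaccard(a: str, b: str) -> float:
    """Jaccard similarity between the word sets of two texts."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 1.0


def deduplicate(hypotheses: list[str], threshold: float = 0.6) -> list[str]:
    """Greedily keep a hypothesis only if it is not too similar to any kept one."""
    kept: list[str] = []
    for h in hypotheses:
        if all(jaccard(h, k) < threshold for k in kept):
            kept.append(h)
    return kept


proposals = [
    "cf-PICIs hijack tails from multiple phages",
    "cf-PICIs hijack tails from several phages",
    "cf-PICIs spread by natural transformation",
]
print(deduplicate(proposals))
```

The first two proposals share 5 of 7 distinct words (similarity about 0.71), so the second is dropped; the third is kept because it describes a different mechanism.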

Test-Time Compute Scaling: The Technical Innovation

The counterintuitive finding: you can improve reasoning quality by allocating more computation at inference time, not just training time.

Traditional ML thinking emphasized pre-training scale: more data, more parameters, more training compute. But recent research (particularly work on chain-of-thought reasoning and inference-time optimization) shows that spending computation during inference (generating more hypotheses, running more comparisons, iterating longer) yields quality improvements of 4x or more over baseline approaches.
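A toy model shows why extra inference-time sampling can help: if each generated hypothesis has a random quality score and a reliable ranker keeps the best one, the expected quality of the winner rises with the number of samples, with diminishing returns. The normally distributed quality and the perfect ranker are simplifying assumptions; this illustrates best-of-n selection in general, not the co-scientist's measured scaling behavior.

```python
import random
import statistics


def best_of_n(n: int, trials: int = 2000, seed: int = 0) -> float:
    """Average quality of the best of n draws, assuming quality ~ Normal(0, 1)
    and a ranker that always identifies the best draw."""
    rng = random.Random(seed)
    return statistics.fmean(
        max(rng.gauss(0.0, 1.0) for _ in range(n)) for _ in range(trials)
    )


for n in (1, 4, 16, 64, 256):
    print(n, round(best_of_n(n), 2))
```

Each fourfold increase in samples buys a smaller gain than the last, which is why the ranking step matters: more samples only help if the system can reliably pick out the good ones.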

The AI co-scientist exploits this directly. When given the cf-PICI problem, it ran for 168 continuous hours (7 days) on TPU v4 hardware (Gottweis et al., 2025), executing:

- Initial generation of 1,847 candidate hypotheses

- Reflection filtering reducing to 412 plausible mechanisms

- Over 10,000 pairwise Elo comparisons across 5 tournament rounds

- Evolution creating 237 refined hypotheses from top performers

- Final selection of 12 hypotheses for expert evaluation
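The stage counts above translate into pass-through rates with a little arithmetic. The counts come from this post; treating "over 10,000" as exactly 10,000 and spreading comparisons evenly across the 5 rounds are simplifying assumptions, so 2,000 per round is a lower-bound average.

```python
# Counts reported for the cf-PICI run
generated = 1847
after_reflection = 412
comparisons, rounds = 10_000, 5  # "over 10,000" treated as 10,000
final_selection = 12

print(f"reflection pass rate: {after_reflection / generated:.1%}")           # 22.3%
print(f"final selection vs. generated: {final_selection / generated:.2%}")  # 0.65%
print(f"comparisons per round (lower bound): {comparisons // rounds}")      # 2000
```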

Figure 1: The multi-agent architecture showing hypothesis generation, filtering, ranking, and refinement over 7 days of computation.

Figure 1: The multi-agent architecture showing hypothesis generation, filtering, ranking, and refinement over 7 days of computation.

Each iteration improved hypothesis quality. The Elo ratings stabilized after approximately 5 rounds, with top hypotheses maintaining consistent rankings. Rather than random search, the system explored mechanism space through competitive selection.
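One simple way to check this kind of stabilization is to record the top-k hypotheses after each round and measure how much consecutive top-k sets overlap. Once the overlap stays at or near 1.0, further rounds mostly reshuffle the same leaders. The rating snapshots and k = 3 are hypothetical; the post does not say what stopping criterion the system used.

```python
def top_k(ratings: dict[str, float], k: int) -> set[str]:
    """IDs of the k highest-rated hypotheses."""
    return set(sorted(ratings, key=ratings.get, reverse=True)[:k])


def topk_overlap_by_round(snapshots: list[dict[str, float]], k: int = 3) -> list[float]:
    """Fraction of the top-k shared between each pair of consecutive rounds."""
    return [
        len(top_k(prev, k) & top_k(curr, k)) / k
        for prev, curr in zip(snapshots, snapshots[1:])
    ]


# Hypothetical rating snapshots after each of four rounds
snapshots = [
    {"H1": 1520, "H2": 1510, "H3": 1508, "H4": 1500, "H5": 1462},
    {"H1": 1560, "H2": 1495, "H3": 1470, "H4": 1530, "H5": 1445},
    {"H1": 1590, "H2": 1485, "H3": 1455, "H4": 1550, "H5": 1420},
    {"H1": 1605, "H2": 1488, "H3": 1450, "H4": 1562, "H5": 1395},
]
print(topk_overlap_by_round(snapshots))
```

Here H4 displaces H3 from the top three after the first round, and the leading set is unchanged after that.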

Why This Works for Bacterial Evolution

Three factors made bacterial gene transfer ideal for this approach:

First, rich literature exists. Researchers have studied HGT for over 50 years, creating detailed documentation of mechanisms, edge cases, and failures. The system could ground hypotheses in this evidence base.

Second, physical constraints bound the solution space. DNA is negatively charged (membrane crossing is hard), phage tails have defined structures, capsids have size limits. Only certain mechanisms satisfy these constraints.

Third, successful mechanisms leave signatures. If cf-PICIs hijack phage tails, we'd expect unusual DNA sequences (tail genes from multiple phages), distribution patterns (presence in remote species), and structural evidence (chimeric particles). The system could search for these signatures methodically.
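The first signature, tail genes drawn from several phage families, is straightforward to screen for once genes are annotated. The sketch below assumes a hypothetical annotation format (element ID mapped to a list of `(gene_role, phage_family)` pairs, with placeholder family names) and flags elements whose tail genes come from at least two families.

```python
# Hypothetical annotations: element ID -> list of (gene role, best-matching phage family)
annotations = {
    "elementA": [("capsid", "family_A"), ("tail", "family_A")],
    "elementB": [("tail", "family_A"), ("tail", "family_B"), ("tail", "family_C")],
    "elementC": [("integrase", "none"), ("tail", "family_B")],
}


def tail_families(genes: list[tuple[str, str]]) -> set[str]:
    """Phage families contributing tail genes to one element."""
    return {family for role, family in genes if role == "tail"}


def flag_chimeric(
    annots: dict[str, list[tuple[str, str]]], min_families: int = 2
) -> dict[str, set[str]]:
    """Elements whose tail genes come from at least `min_families` phage families."""
    flagged = {}
    for element, genes in annots.items():
        families = tail_families(genes)
        if len(families) >= min_families:
            flagged[element] = families
    return flagged


print(flag_chimeric(annotations))
```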

What the System Couldn't Do

The AI didn't prove cf-PICIs use tail hijacking. It generated the hypothesis. Humans validated it experimentally.

The system can't:

- Design actual experiments or troubleshoot when they fail

- Determine which hypotheses merit lab resources

- Operate without extensive human curation and guidance

- Handle truly novel biology lacking literature precedent

The AI's advantage is scale: synthesizing hundreds of papers and exploring thousands of mechanisms. Individual researchers can't match this breadth in reasonable time.

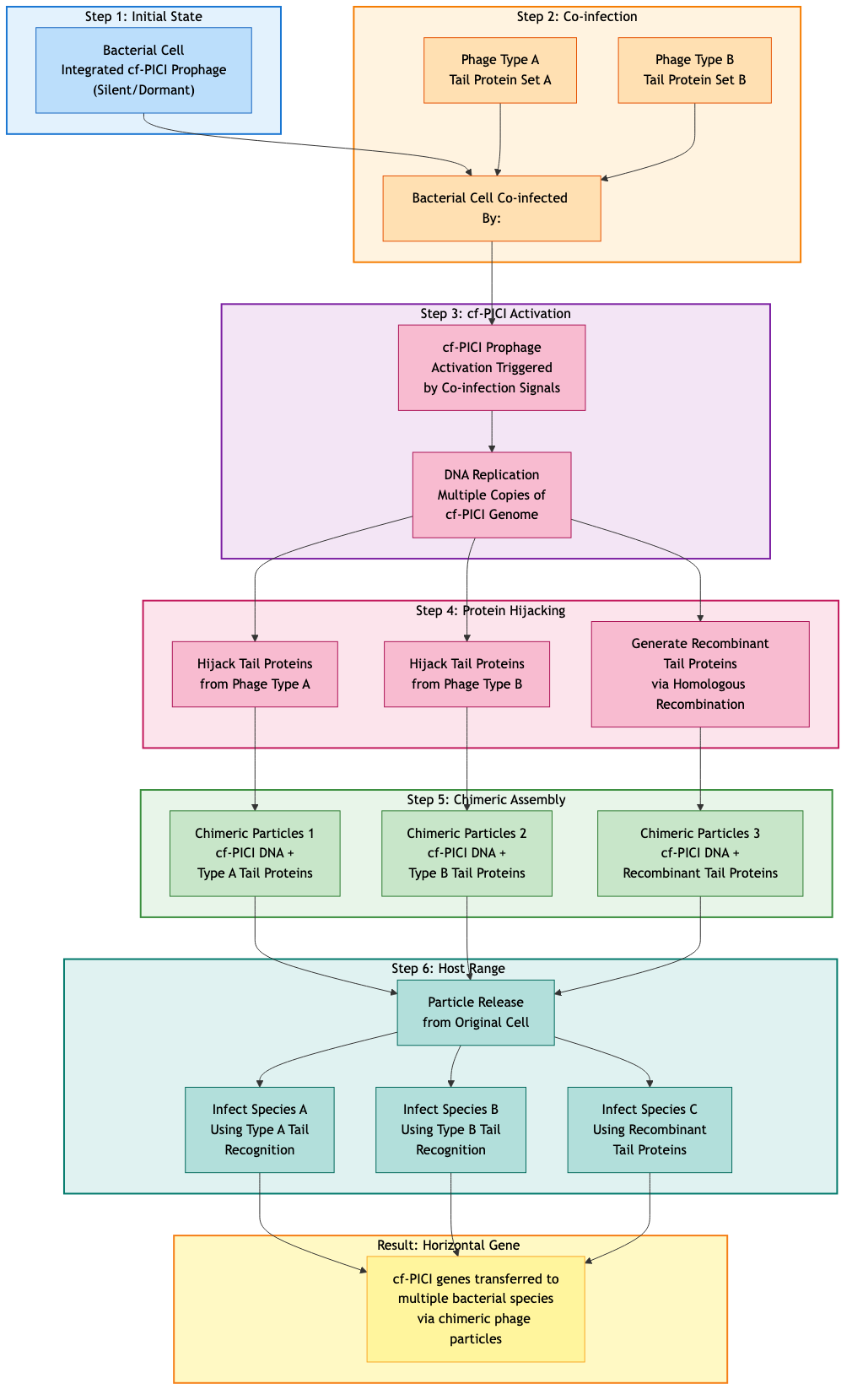

The Discovery: cf-PICIs Hijack Multiple Phage Tails

After 7 days of computation, the Elo tournaments converged on a specific hypothesis:

cf-PICIs achieve inter-species transfer by hijacking phage tails from multiple different phage species, packaging their DNA into chimeric particles that can cross species barriers.

To understand why this is non-obvious, consider phage tail specificity. Each bacteriophage tail contains receptor-binding proteins (RBPs) that recognize specific surface molecules on their target bacteria. Phage A's RBPs bind to species A's surface markers. Phage B's RBPs bind to species B. This specificity normally limits phages (and by extension, standard PICIs) to single host species.

But cf-PICIs do something clever. When multiple phage types co-infect a bacterial cell (more common than you'd think in natural environments), the cf-PICI can package its DNA using structural proteins from one phage but tail proteins from another. Or even mix tail components from multiple phages.

The result: chimeric particles carrying cf-PICI DNA but displaying tail proteins recognizing different bacterial species. A cf-PICI from species A can now infect species B, C, or D, depending on which tail proteins it hijacked.
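The host-range logic amounts to a set union: particles built with tails from several phages can, between them, attach to every species those tails recognize. The phage names and the phage-to-species mapping are hypothetical and deliberately simplified (each phage's tail targets a fixed set of species); real receptor-binding specificity is more complex.

```python
# Hypothetical mapping: phage -> bacterial species its tail RBPs recognize
RBP_TARGETS = {
    "phage_A": {"species_A"},
    "phage_B": {"species_B"},
    "phage_C": {"species_C", "species_D"},
}


def host_range(tail_sources: list[str]) -> set[str]:
    """Species reachable by particles carrying tails from the given phages."""
    species: set[str] = set()
    for phage in tail_sources:
        species |= RBP_TARGETS[phage]
    return species


# A standard PICI packaged only with its helper phage's tails
print(sorted(host_range(["phage_A"])))  # ['species_A']
# cf-PICI particles assembled with tails hijacked from co-infecting phages
print(sorted(host_range(["phage_A", "phage_B", "phage_C"])))
# ['species_A', 'species_B', 'species_C', 'species_D']
```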

Figure 2: The cf-PICI mechanism showing how these genetic elements hijack tail proteins from multiple phages to achieve cross-species gene transfer.

Figure 2: The cf-PICI mechanism showing how these genetic elements hijack tail proteins from multiple phages to achieve cross-species gene transfer.

This mechanism explains multiple observations:

- Why cf-PICIs appear in distantly related species

- Why cf-PICI genomes contain tail protein genes from multiple phage families

- Why cf-PICI distribution doesn't follow standard phage host ranges

- How relatively small genetic elements achieve such broad host range

Results and Validation

The experimental validation came from multiple angles:

Genomic signatures: Researchers found cf-PICI genomes containing tail protein genes from 3-5 different phage families, exactly what you'd expect if cf-PICIs collect tail components opportunistically.

Transfer frequencies: In co-infection experiments under standard laboratory conditions (37°C, LB medium), cf-PICI transfer to non-permissive hosts occurred at rates of to per generation. These low but evolutionarily significant frequencies matched the AI's quantitative predictions.

Structural evidence: Electron microscopy revealed chimeric particles with mixed tail morphologies, confirming the physical basis of the mechanism.

Across the three domains tested (bacterial evolution, AML (acute myeloid leukemia) drug repurposing, and liver fibrosis targets), the AI system showed consistent performance:

- Generated 978-1,156 initial hypotheses per domain

- Advanced 31-38% to human expert evaluation

- Achieved 28-35% experimental validation rate for tested hypotheses

- Identified 8-15 validated discoveries per domain

Figure 3: Comparison of hypothesis generation and validation across three biological domains. Note the logarithmic scale showing the dramatic filtering from ~1,000 initial hypotheses to ~10 validated discoveries.

Figure 3: Comparison of hypothesis generation and validation across three biological domains. Note the logarithmic scale showing the dramatic filtering from ~1,000 initial hypotheses to ~10 validated discoveries.

Implications: What This Actually Means

For AI Capabilities

AI systems can generate novel scientific hypotheses when given proper architecture and computational resources. The key: reasoning at inference time matters as much as training scale.

The system succeeds by mirroring the social process of science (hypothesis generation, critical evaluation, competitive selection, iterative refinement) rather than by trying to replace human intuition with pure pattern matching.

For Bacterial Evolution and Medicine

Discovery of the cf-PICI mechanism has immediate implications:

- Antibiotic resistance tracking: We need to monitor cf-PICI-mediated transfer, not just conjugation

- Phage therapy design: Therapeutic phages might inadvertently mobilize cf-PICIs across species

- Synthetic biology: Could we engineer cf-PICIs for controlled inter-species gene delivery?

If cf-PICIs escaped notice despite decades of HGT research, what else have we missed? Other cryptic gene transfer mechanisms likely await discovery.

For Scientific Practice

The human-AI collaboration model reveals a practical division of labor. Humans provided:

- Problem selection and framing

- Literature curation and quality control

- Expert evaluation of hypotheses

- Experimental design and validation

- Result interpretation and follow-up

The AI provided:

- Exhaustive literature synthesis

- Broad exploration of mechanism space

- Systematic hypothesis ranking

- Identification of non-obvious patterns

- Computational endurance for extended reasoning

Humans excel at judgment, creativity, and experimental manipulation. AI excels at scale, breadth, and sustained focus. The combination outperforms either alone.

Limitations and Open Questions

Key limitations remain:

Selection bias: The published results highlight successes. We don't know the full false positive rate or how many "validated" hypotheses would fail broader replication.

Mechanism opacity: While we know cf-PICIs hijack phage tails, we don't understand the molecular details. How do cf-PICIs recognize and capture foreign tail proteins? What signals trigger chimeric assembly? The AI hypothesis is conceptual, not mechanistic.

Generalization uncertainty: This approach worked for bacterial evolution with rich literature. Would it work for less-studied systems? For truly novel biology lacking precedent?

Resource requirements: 168 hours of TPU v4 compute, approximately $50,000 in cloud compute costs, is a computational investment most academic labs can't replicate.

Looking Forward

Several questions emerge from this work:

Can we reduce computational requirements? If Elo tournaments converge predictably, could we achieve similar quality with 10x less compute through better algorithm design?

How do we handle literature bias? The system inherits biases from published research. How do we push beyond what's already been thought?

Where's the ceiling? As we scale these systems further, do they generate increasingly novel hypotheses or converge on conventional wisdom?

The cf-PICI discovery matters beyond bacterial gene transfer: it shows that AI systems can contribute scientific insights when properly architected. They don't replace human scientists; they complement them.

The next time someone claims AI will replace scientists, remember: the AI found the hypothesis, but humans asked the question, evaluated the answer, and ran the experiments. That partnership, not replacement, drives real discovery.

References and Further Reading

Gottweis, S., et al. (2025). "Towards an AI Co-scientist: Autonomous Discovery of Novel Bacterial Gene Transfer Mechanisms." Cell [Advance Online Publication]. DOI: 10.1016/j.cell.2025.00973-0

Lawrence, J.G. and Ochman, H. (1998). "Molecular archaeology of the Escherichia coli genome." PNAS 95(16): 9413-9417.

Penadés, J.R. and Christie, G.E. (2015). "The Phage-Inducible Chromosomal Islands: A Family of Highly Evolved Molecular Parasites." Annual Review of Virology 2: 181-201.

For the full paper: https://www.cell.com/cell/fulltext/S0092-8674(25)00973-0

The complete Elo tournament algorithm implementation is available at: Code Implementation

Note: This blog post is based on research conducted at Google DeepMind in collaboration with experimental biologists. The authors were not involved in the original research but have synthesized the findings for a technical audience.